When I started my career as a junior software developer, my team and I were tasked with optimizing the onboarding flow for a well-known website in Germany. We ran many A/B tests to find out how to increase the completion rate of the onboarding process and to encourage users to become more active on the platform.

At first, I really liked A/B testing. If you’re unfamiliar with it, the concept is straightforward: you come up with an idea to improve an existing user experience, then divide your users into two (or more) groups. One group (Group A) gets the original experience, and the other (Group B) gets the new version. You run the test until enough users have participated to draw a conclusion, and then analyze the results. For example, if Group B’s onboarding completion rate is 15% higher than Group A’s, you might decide the new version is better and roll it out to all users.
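To make the “analyze the results” step more concrete, here is a minimal Python sketch of how a single conversion metric, such as onboarding completion, might be compared between two groups. It assumes deterministic hash-based bucketing and a classic two-proportion z-test; the experiment name, user ID, and counts are hypothetical, and real experimentation platforms may use different assignment schemes and statistical methods.

```python
import hashlib
import math


def assign_group(user_id: str, experiment: str = "onboarding-v2") -> str:
    """Bucket a user into 'A' or 'B' by hashing the experiment name and user ID.

    Hashing (instead of a random choice per request) keeps each user in the
    same group on every visit. "onboarding-v2" is a hypothetical experiment name.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def compare_completion_rates(done_a: int, total_a: int, done_b: int, total_b: int):
    """Two-proportion z-test on completion counts for groups A and B.

    Returns (relative_lift, z_score, two_sided_p_value).
    """
    rate_a = done_a / total_a
    rate_b = done_b / total_b
    # Pooled rate under the null hypothesis that both groups complete equally often.
    pooled = (done_a + done_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    relative_lift = (rate_b - rate_a) / rate_a
    return relative_lift, z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 20% completion in A, 23% in B (a 15% relative lift).
    lift, z, p = compare_completion_rates(400, 2000, 460, 2000)
    print(f"relative lift = {lift:.1%}, z = {z:.2f}, p = {p:.3f}")
    print(assign_group("user-42"))
```

With these made-up counts, Group B’s completion rate is 15% higher in relative terms (23% vs. 20%), and the test indicates whether that gap is unlikely to be random noise. It says nothing about anything the chosen metric doesn’t capture.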

When you think about it, it makes sense. You base decisions on data, and since you’re testing on only half of your users, you take on less risk. If things go wrong during the test, you can hold off on rolling out the new experience to everyone. Product managers and team leads can also demonstrate their impact by showing how much they contributed to improving the product. It’s a win-win for everyone. And even if things go south later, you can always say, “But we did an A/B test and didn’t see any decrease,” so you’re off the hook for any negative outcomes.

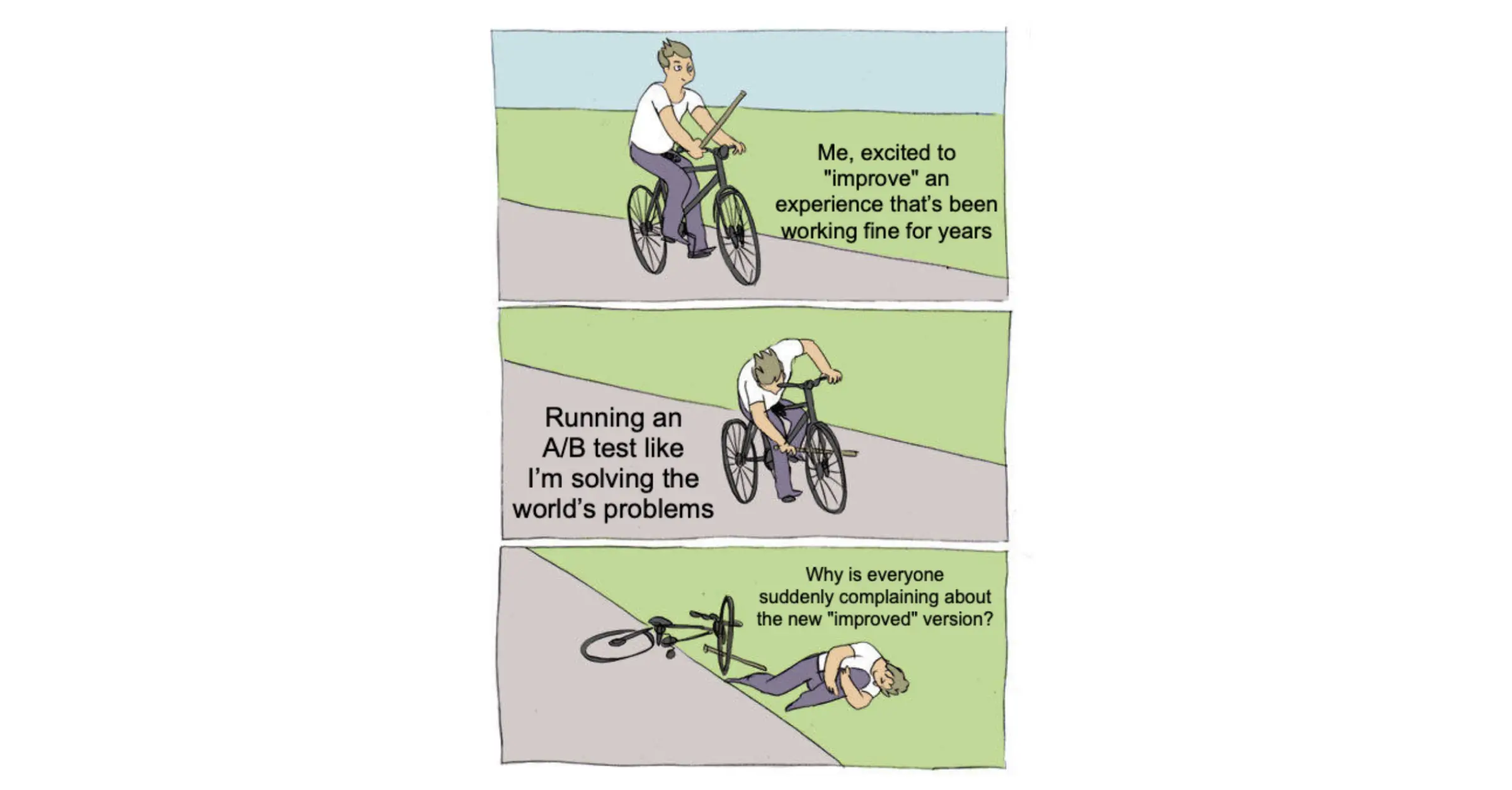

But as I gained more experience and ran more A/B tests, I began to realize something. Relying too heavily on numbers and tests can make you lose sight of the bigger picture: the overall user experience. Instead of solving real user problems, the focus shifts to optimizing metrics and chasing numbers. The quality of the experience takes a backseat, and decisions are driven more by data than by an understanding of how the product truly serves its users.

Enshittification

If you’re not familiar with the term “Enshittification,” here’s a definition from Wikipedia:

Enshittification, also known as crapification and platform decay, is the term used to describe the pattern in which online products and services decline in quality over time. Initially, vendors create high-quality offerings to attract users, then they degrade those offerings to better serve business customers, and finally degrade their services to users and business customers to maximize profits for shareholders.

Let me give you an example. Spotify began as a music streaming service. Over time, it added podcasts and audiobooks to the platform. Users who had always used Spotify just to listen to music began to notice that the app was becoming harder to navigate. They were constantly shown podcast and audiobook recommendations, and finding music became more difficult as the UI grew cluttered.

Of course, Spotify does A/B testing, just like many other tech companies. The issue is that when these changes were made, Spotify likely measured metrics like user engagement: how much time people spent in the app and how many actions they took. Naturally, if you make it harder for users to find their music, they might spend more time searching for it. And even when finding the music you wanted became more difficult, you’d still end up listening to it, so engagement wouldn’t drop. That made it easy for the teams behind the changes to claim that the home screen redesign and the introduction of podcasts and audiobooks didn’t hurt engagement. The changes were rolled out to all users, but things like frustration or the overall user experience weren’t measured. Since some users enjoyed the podcasts and audiobooks, the teams could show positive results and claim success. As a result, some of them likely got promoted and moved on to other projects.

I’ve witnessed similar changes in products I’ve worked on. For instance, I worked on an app that helped users track their packages (shipped, out for delivery, and so on). The app didn’t have live tracking, the feature that shows your package on a map in real time. But the growth team decided to tweak the notification copy to include “live tracking” in the message. They ran an A/B test and saw an increase in app openings (big surprise there). They also checked whether the change led to fewer users enabling notifications. When they saw no decrease, they took it as a sign that the change was successful, without considering that people kept notifications on simply because they still wanted to know where their package was, even though the live tracking feature didn’t exist. They used A/B testing to boost their numbers without considering the long-term consequences. Some team members likely got promoted, users were frustrated to find no live tracking, and the overall experience worsened.

How A/B Testing Builds Confidence

When I first realized what the team was working on, I reached out to them and shared my concern. I told them that the changes they were making were wrong and would degrade the overall user experience. They disagreed with me, pointing to their data, while I had no numbers to back up my intuition. Yet, deep down, I knew these changes would create a negative experience with long-term consequences that couldn’t be captured through A/B testing.

Once people discover how to use A/B testing to justify their decisions, they’ll continue doing so. This is where A/B testing can give confidence to people who lack a solid understanding of user experience or design. When you challenge them on the negative impact of a decision, they can simply point to the results of A/B tests and dismiss any concerns, claiming they don’t see any issues.

The same individuals often start using A/B testing to improve metrics rather than to address real user problems. For example, instead of solving core user pain points, they might suggest changing a button’s color to see whether it affects click rates. Even if you know the idea is flawed, the decision-maker can argue that you can’t be sure until it’s tested.

So your entire team ends up working on experiments and tweaks that may not solve actual user problems but are designed to boost certain metrics. In the end, you may see a slight increase in button clicks, and the change gets rolled out. Users, however, begin to complain that the app is becoming harder to use and that they can’t find the features they once relied on. In many cases, the person responsible for the decision has already moved on to a different team or a higher position, so the changes are never revisited or reversed. Or, with new A/B tests on the horizon, the team simply keeps pushing forward, never addressing the real problems users are facing.

Thanks to A/B testing, it’s easy for people to showcase their success, which helps them climb the corporate ladder. However, as they rise into decision-making positions, they often keep pushing the same data-driven approach without considering the broader user experience. Over time, this leads to the enshittification of products, where quality takes a backseat to short-term metrics.

In contrast, the most successful companies in tech have historically relied on strong design instincts, intuition, and a deep understanding of user needs rather than just data. As we continue to develop new products, it’s crucial that we strike a balance—using data to inform decisions, but never losing sight of the bigger picture: creating intuitive, user-centered experiences. Only by prioritizing true quality over shallow metrics can we prevent our favorite tools and platforms from falling into the trap of enshittification.